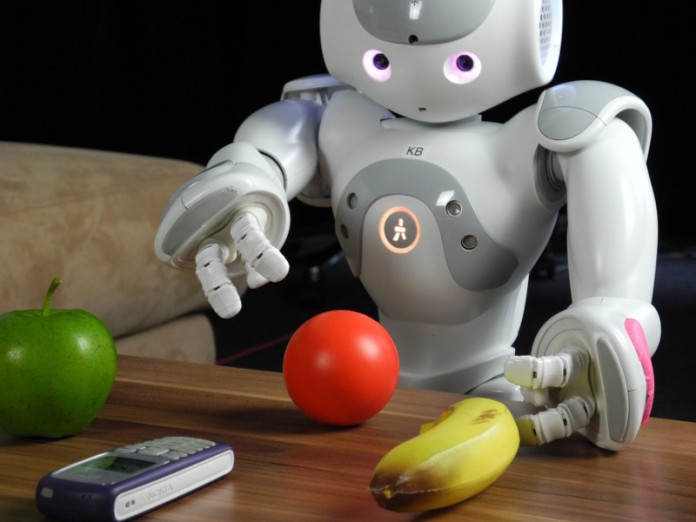

Scanning several videos on the same how-to topic, a robot’s computer finds the instructions they have in common and combines them into a single step-by-step sequence.

If you hire new employees, you might sit them down to watch an instructional video on how to do the job. What happens if you buy a new robot?

Cornell researchers are teaching robots to watch instructional videos and derive a sequence of step-by-step directions for carrying out a task. You won’t even have to turn on the DVD player; the robot can look up what it needs on YouTube. The work is aimed at a future in which we might have “personal robots” to carry out everyday tasks – feeding the cat, washing dishes, cooking, doing the laundry – as well as to help the elderly and people with disabilities.

The researchers call their project “RoboWatch.” Part of what makes it possible is that most how-to videos share a common underlying structure. And there is plenty of source material out there: YouTube has more than 180,000 videos on “How to make an omelet” and 809,000 on “How to tie a tie.” By scanning multiple videos on the same task, a computer can find what they all have in common and reduce that to simple step-by-step directions in natural language.

Why do people post all these videos? “Maybe to help people or maybe just to show off,” said graduate student Ozan Sener, lead author of a paper on the video parsing method presented Dec. 16 at the International Conference on Computer Vision in Santiago, Chile. Sener collaborated with colleagues at Stanford University, where he is currently a visiting researcher.

A key feature of their system, Sener pointed out, is that it is “unsupervised.” In most previous work, robot learning is accomplished by having a human explain what the robot is observing – for instance, teaching a robot to recognize objects by showing it photos of the objects while a human labels them by name. Here, a robot with a job to do can look up the directions and figure them out for itself.

Faced with an unfamiliar task, the robot’s computer brain begins by sending a query to YouTube to find a collection of how-to videos on the subject. The algorithm includes routines to omit “outliers” – videos that match the keywords but are not instructional; a query about cooking, for instance, might bring up clips from the animated feature Ratatouille, ads for kitchen utensils or some old Three Stooges routines.
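To make the idea of omitting outliers concrete, here is a toy Python sketch. It is not the researchers’ algorithm, whose details the article does not give; it simply assumes that instructional videos on one task share vocabulary in their subtitles, and drops any video whose words overlap little with the rest. The function names, the threshold value and the sample transcripts are all made up for illustration.

```python
def jaccard(a, b):
    """Jaccard similarity between two sets of words."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def drop_outliers(transcripts, min_avg_similarity=0.2):
    """Keep videos whose vocabulary overlaps with the rest of the collection.

    transcripts: dict mapping a (hypothetical) video id to its subtitle text.
    min_avg_similarity: illustrative threshold, not a value from the paper.
    """
    words = {vid: set(text.lower().split()) for vid, text in transcripts.items()}
    kept = []
    for vid, vocab in words.items():
        others = [w for other, w in words.items() if other != vid]
        if not others:
            kept.append(vid)  # a single video has nothing to be compared with
            continue
        avg = sum(jaccard(vocab, w) for w in others) / len(others)
        if avg >= min_avg_similarity:
            kept.append(vid)
    return kept


# Invented sample data: three omelet tutorials and one unrelated clip.
sample_transcripts = {
    "omelet_a": "crack the eggs whisk the eggs pour into the pan fold the omelet",
    "omelet_b": "whisk the eggs heat the pan pour the eggs fold and serve",
    "omelet_c": "crack eggs into a bowl whisk pour into hot pan fold the omelet",
    "cartoon": "a rat dreams of becoming a chef in paris",
}
print(drop_outliers(sample_transcripts))
```

With this sample data the three omelet tutorials are kept and the unrelated clip is dropped. A real system would need something more robust than raw word overlap, since common words such as “the” inflate the score.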

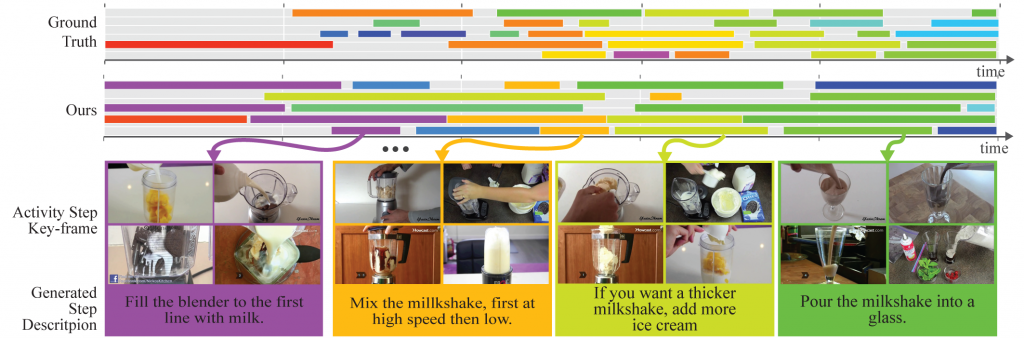

The computer scans the clips frame by frame, looking for objects that appear often, and reads the accompanying narration, using subtitles, looking for frequently repeated phrases. Using these markers, it matches similar segments across the various videos and orders them into a single sequence. From the subtitles of that sequence it can produce written directions. In other research, robots have learned to carry out tasks by listening to verbal instructions from a human. In the future, information from other sources such as Wikipedia might be added.
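The text half of that process, finding repeated phrases and ordering them into one sequence, can be illustrated with a small Python sketch. This is a simplified stand-in rather than the method from the paper: it ignores the visual side entirely, treats two-word phrases in subtitle lines as the “markers,” and orders shared phrases by their average relative position in the videos. The function names and sample subtitles are hypothetical.

```python
from collections import defaultdict


def bigrams(line):
    """Return the set of adjacent word pairs in one subtitle line."""
    words = line.lower().split()
    return {" ".join(pair) for pair in zip(words, words[1:])}


def common_steps(videos, min_videos=2):
    """Find phrases shared across videos and order them by where they occur.

    videos: list of videos, each a list of subtitle lines in playback order.
    Returns phrases seen in at least `min_videos` videos, sorted by their
    average relative position (0.0 = start of a video, 1.0 = end).
    """
    positions = defaultdict(list)  # phrase -> one relative position per video
    for lines in videos:
        first_seen = {}
        for index, line in enumerate(lines):
            rel = index / max(len(lines) - 1, 1)
            for phrase in bigrams(line):
                first_seen.setdefault(phrase, rel)  # keep first occurrence only
        for phrase, rel in first_seen.items():
            positions[phrase].append(rel)

    shared = {p: sum(r) / len(r) for p, r in positions.items() if len(r) >= min_videos}
    return sorted(shared, key=lambda p: (shared[p], p))


# Invented subtitle lines for three omelet videos.
sample_videos = [
    ["crack eggs", "add salt", "whisk eggs", "heat pan", "fold omelet"],
    ["welcome back", "crack eggs", "whisk eggs", "fold omelet"],
    ["whisk eggs", "heat pan", "fold omelet", "thanks everyone"],
]
print(common_steps(sample_videos))
# prints ['crack eggs', 'whisk eggs', 'heat pan', 'fold omelet']
```

Phrases that occur in only one video, such as a greeting or a sign-off, fall away, and what remains reads as a rough recipe. Averaging positions is a crude way to order steps; it works here only because the sample videos present the steps in roughly the same order.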

The knowledge learned from the YouTube videos is made available through RoboBrain, an online knowledge base that robots anywhere can consult to help them do their jobs.

The research is supported in part by the Office of Naval Research and a Google Research Award.
